Over the last ten years or so, I’ve written over 100 reviews for Silent Radio (woohoo!), but I feel increasingly out of touch with modern music (and reality) as I listen to a passable Frank Sinatra impersonation with full backing band singing “On Christmas day / a true unholy beast / went down to hell / with human flesh for sacrifice / WITH HUMAN FLESH FOR SACRIFICE”.

While the winter solstice may have some dark history, this imagery arose not deliberately from the dark depths of the human mind but was “written” – as the music was – by an artificial intelligence, part of the OpenAI project. Also, who am I trying to kid? This is not out of my comfort zone in any way – I love Richard Cheese and the Residents, so this is right up my darkened alley.

Listening to these bizarre computer-generated tracks is like being in an episode of the Twilight Zone where humans, kidnapped by aliens, find themselves in what looks exactly like an apartment but where none of the boxes of cereal or bags of crisps actually come open – they’re just solid shapes – and you can’t pick up the soap because it’s part of the soap dish… which is part of the sink…

If you’re not paying attention (or if you’re my next-door neighbours, hearing through the walls), this would probably just about pass for “real” music – but on closer inspection it’s all jarring and unsettling for so many, many reasons.

MuseNet Logo

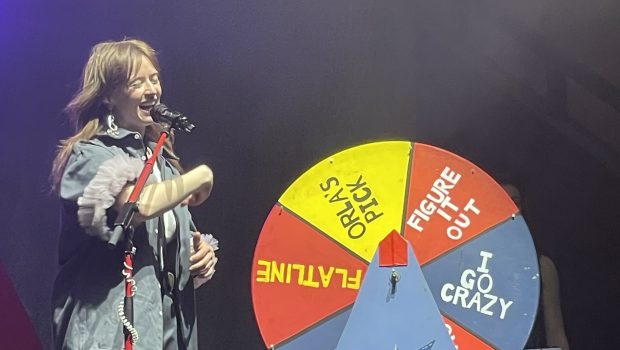

I trawled OpenAI’s year-long back catalogue and found it started learning from MIDI compositions as a program called MuseNet. MuseNet starts out with Mozart, mimicking passable child-genius compositions, then progresses to Rachmaninoff and churns out a credible facsimile of a cat running on a piano, then what sounds exactly like some super-fast piano playing just cut up and re-arranged at random. But very quickly, MuseNet developed into a credible tool for producing anodyne, soulless muzak to match anything a mid-80s New York department store perfume department had to offer – and this tool is now available to all.

Is it a tool for music makers?

The technology is not totally there yet, but I can definitely see later iterations being built into the GarageBand, Logic Pro and Pro Tools of the future, to be used by beginners, by that special breed of “high-productivity, low personality” musicians, or by those without formal musical education who need original compositions for whatever reason. Think of it like the “demo” button on an old Casio keyboard, but for the 21st century.

It’s for people who regularly produce throwaway soundtracks – not for people who produce deeply personal albums. It’s for people who are starting out as singers or lyric writers and want original compositions to work on to improve their skills. It’s for people with something to say musically but who don’t have the musical education to know “what chord should come next?” It’s for musicians who want unique royalty-free “samples” and it’s a big extension of what’s already possible in taking a melody or an audio recording of your own song and getting a virtual “band” to back it up.

It remains to be seen over the coming years whether we see this tool result in a development of urban music into more harmonically diverse forms and/or a stultification of more classically-influenced genres into cookie-cutter “perfect” chord progressions – but I think wherever humans still make music, there will always be someone looking to make something that sounds different to everything else. The very nature of making music worth listening to lies in achieving the right balance between the predictable and the unpredictable. I think at its best, this technology will act as a guide – an advisor that can be followed or ignored and tweaked based on our own tastes and preferences.

What we are not looking at is the new 808 that will usher in a distinctive new sound or genre of music. However, what the development of OpenAI represents in music is likely to be even more revolutionary in the long run, further devaluing music financially and ushering in an era of almost limitless effectively “licence free” music, which could be used as backing for anything from YouTube channels to low-budget films to video games – and which will incidentally further crowd out independent releases.

There are situations where original auto-generated content would be perfect. The technology would solve a couple of problems in computer games (including apps): firstly, by providing an option for small-scale developers who cannot afford to license music; secondly, by overcoming the problem where repeating sections of a game or spending long periods in the same area means that the same music is repeated over and over. This can really grind a player down.

The video-game industry has been using “procedural generation” to create random landscapes since 1990s titles like Scorched Earth and Worms, and there are currently companies creating “deeply adaptive” music to solve the second problem. There now seem to be few barriers left to generating a non-repeating “radio station” in a video game, without ever licensing a song… or to simulating a unique local band as part of a storyline, based on the player’s own musical preferences… or even to creating an infinite number of new tracks or “levels” for Rocksmith or Guitar Hero. Future interactive TV (like Black Mirror Bandersnatch) could potentially use this “never the same” approach to make its content seem more original on each repeat play.

I guess I can even see teams of songwriters using tools like this for screening new ideas, trying out new arrangements of their own songs or incorporating or tweaking output from the model into original compositions. The possibility of telling the tool to “make a country-and-western remix of this song” is already within believable reach.

The traditional songwriting process, much as the recording process already has, will become available to millions more people, rather than just those with a musical education. It is being “democratised” – a term that nowadays seems to be applied when someone is losing their income – much in the same way as the automation of work in the Industrial Revolution.

Would Karl Marx be dancing, rather than spinning in his grave, given that MuseNet is open source, therefore available to every rich kid with a Mac? I think he would note that it’s the means of distribution, rather than production, that represents control of the music industry (and, to an extent, always has). Although OpenAI cuts out most of the recording process instantly (no studio required!), the challenge remains what it always has been – getting people to actually listen to your tunes. The product is not the song, but the records people buy and stream and the videos they watch…

Supercharged by the advent of digital music, torrents and now the highly-successful global streaming platforms (who take micro-transactions or advertising cents on every single play, while paying a pittance for the recordings they share to multi-millions of people), we are in a situation where there is no need for an individual, workplace or venue to spend meaningful sums of money on music any more. We find ourselves a long way down the road where musicians, performers and artists are losing the ability to make a sustainable income, and songwriters too seem to be firmly in the cross-hairs.

The late 20th century saw live bands pushed out by DJs, who are now being made obsolete by bar staff, who can just plug in a phone and select a new playlist in less time than it takes to pour a pint. Given the demand for something exciting and new, I can imagine holographic bands performing from the OpenAI jukebox in a club in the 2030s (if there are any nightclubs left by then). The same sort of setup could potentially be used to replicate a one-night “tour” at dozens of venues simultaneously. However, the future I can see involves even further shifts away from music-making as a viable career for anyone other than the very most successful: those who have broad appeal or a significant, highly loyal fan base. Will programs like OpenAI be able to plug the skills gap and make up for the loss of the innovation that has previously come from those musicians who are afforded the time and freedom to develop and hone new techniques? To venture into new musical territories?

It seems to me that the days of big-budget prog and experimental records are gone. Record companies are looking for safe bets – reliable returns from minimal investment. At the same time, success in the mainstream music industry is so often based not on music, but on leveraging a non-musical appeal (being an idol, icon or anti-hero) to reach fans. Technical skill or the ability to produce well-written songs is desirable but not a requirement. Consistent, repeated success still remains elusive for all but a very few.

This is exactly the principle on which the X-Factor is based: winners’ success is based not on their talent per se, but on the show’s format identifying and developing the audience’s feelings of empathy with or sympathy for the artist, and by playing on the desire to be like that person (or to be with them). The appeal of rock music is based on the exact same principles, only it’s for people who prefer their music(ians) spicy as opposed to sweet.

To break into the music market, AI has a long, long way to go to develop sympathetic faces and meaningful relationships to sell its music (although an AI artist could potentially have infinite time to chat with its fans). Current attempts at AI personalities are left floundering in antisemitic chatbot Twitter accounts or mired in the drudgery of a two-week blind date between a Hitler advocate and a Leeds United fan.

What’s more, so much of the AI-generated musical content is the perfect embodiment of one of my perennial complaints about human music – technically adept song-craft with absolutely no emotional content. Bill Hicks said it better.

Of course, it’s a category error to review these sounds in the way that one would review music – at this stage it’s nothing more than a hilarious and mildly disturbing technical curio. So, obviously, here are my selected reviews:

The Led Zeppelin soundalike track (from OpenAI’s “back when they were shit” period of six months ago) epitomises my “lack of emotion” gripe perfectly. It might sound a little like the sort of arrangement Zep might fire off when warming up their amps while seriously hungover and smacked-up to the eyeballs, but those guys rocked – this is just banal in the extreme. Also, the AI is terrible at lead guitar and seems to have no idea how to rock (or be funky) – and those are all pretty big deals in my world.

OpenAI’s attempts to imitate Jack Johnson sound like a skiffle band in the 1970s just heard their first ever reggae song, got really excited, then immediately tried to record an homage. Also, the AI seems to think that Johnson’s lyrical style is best epitomised by mumbling the lyrics of ‘Baby Shark’ really fast (it also improvs “Grandma shark”, which shows the level of creativity we are looking at right now).

‘Pop in the style of The Beatles’ is arguably more coherent than ‘Revolution 9’ but much less so than ‘A Day in the Life’ or ‘Helter Skelter’. It would have still sent Manson insane. The lyrical themes, strangely, also revolve around Grandma Shark. Eat that, Lennon and McCartney.

OpenAI’s “Simon and Garfunkel” is like listening to Fleet Foxes underwater… on acid. It also highlights that AI doesn’t seem to do a great job on vocal harmonies – or on intros or endings or on distinguishing between instruments (particularly cymbals) and white noise.

‘Early January… in the style of 2Pac’ mostly sounds like trying to tune in to the sounds of a riot on a radio station that is just out of area (but OpenAI still manages to drop a couple of discernible N-bombs into the gibberish).

All of these are major stumbling blocks for making full-length tracks, more so even than song structure – although there is little evidence of repeating sections or what I would traditionally think of as musical organisation in any of the pieces I have heard. There is definitely a good facsimile of dynamics and cadence in a lot of the tracks, though.

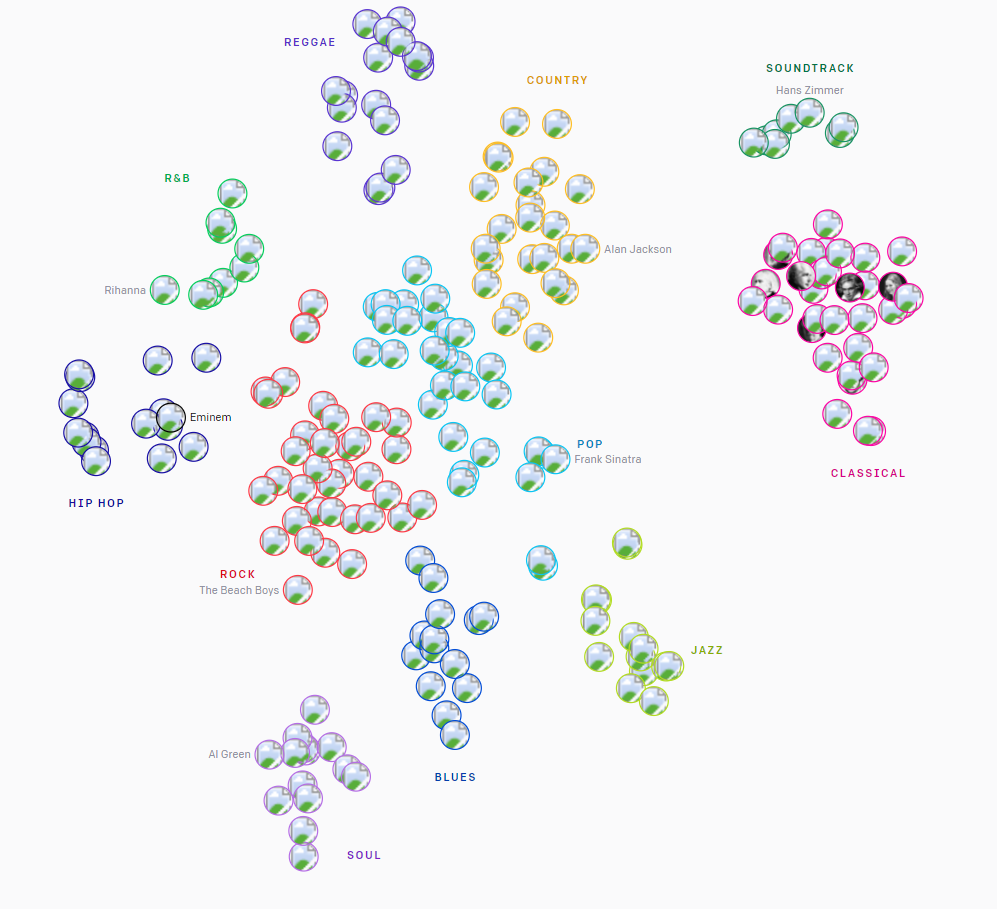

It’s interesting to note how much melody and harmony influence our perception of style – small changes in chord structure and rhythm make a lot of the genres cross over into styles other than the ones they were “inspired” by. Pop becomes country, country becomes reggae and folk rock becomes an utter mess.

OpenAI’s artist and genre groupings – interactive map at https://openai.com/blog/jukebox/

It’s like that wonderful Bill Bailey sketch about U2 guitarist the Edge, where his expansive delay-drenched guitars suddenly suffer a technical glitch and he’s revealed to be playing Jingle Bells. One hugely interesting thing for me is that, just like driverless cars, it’s impossible to “take off the hood” of this program and unpick the decision-making processes that underlie what the program does. It is basically impossible to try to address the inconsistencies between the AI and human perceptions.

Finally, to my outstanding and overwhelming favourite: ‘Children’s music in the style of The Verve Pipe’. This should NEVER be played to children, particularly if you want them to grow up outside a mental institution. It’s like Pink Floyd and Opeth collaborating to petition Satan about the inconsistencies of food naming: “Why do we call it a hamburger if there’s no ham in it? Why do we call it a hot dog if there’s no dogs in it?”. I laugh every time I hear it.

This utterly irrelevant babbling raises a very pertinent analogy between OpenAI’s current output and vegan “meat substitutes”. Yes, it’s great: no creatures had to suffer and die in the making of this, and I celebrate that. Also, as we put in more time and effort to fake music meat, the quality will improve (and my expectation is that you won’t believe how fast – just look at the story of AlphaGo). We may even get to the point where you can say that fake music meat is “better” than real music meat – but somehow, on some level, you always just know. You just know that it’s not real. I suspect that there will always be some things you just can’t recreate. This analogy falls down when we talk about cheese, which will be the first domino to topple in OpenAI’s conquest of the music world and yet will remain to protein-hydrolysing hippies as the fruit and water were to Tantalus in his own personal hell – forever just beyond reach.

I suspect that noise-art originators The Residents would have been delighted at OpenAI’s current output; much of the Soundcloud channel is utterly hilarious when you actually listen closely to it and yet much of it is so passably musical-sounding. It’s the audio epitome of the modern zeitgeist – utterly meaningless and fake, yet in humanity’s shallow, information-overloaded and permanently-distracted state, it’s just enough to superficially appear real and substantial.

Speaking of insubstantial: the commercial genius of the X-Factor is its trial-and-error approach (the discussion of why this approach so frequently churns out either quasi-attractive anodyne young men or voluptuous girl bands is one for another time…). To compare this with the story of AlphaGo: AI’s success in beating the world champion in arguably the world’s most complex strategy game came by supplementing analysis of the most likely move that a winning player would play with a much more nuanced and subtle approach from deep-learning networks (go on, seriously: read about it).

I believe OpenAI will develop in a similarly unexpected way, and produce genuinely interesting and surprising compositions – however, what the AI probably doesn’t have right now that the X-Factor does is the ability to carry out repeated controlled testing on large audiences of human beings. Nothing sums up how unexpected Susan Boyle’s success on Britain’s Got Talent was better than the fact that Simon Cowell claimed that he could see it coming – but it’s a coming together of so many tiny variables that makes the difference between someone leaving you cold and bringing a tear to your eye. Will the AI be able to nail that? I think it’s highly unlikely – but if it finds channels to “beta test” songs and personalities on large numbers of people, I think it’s possible that we could eventually reach the landmark of an AI-generated piece of music reaching number one in the charts.

However, the challenge remains overwhelming at present – it would take quite some artist to maintain any sort of credibility while simperingly emoting “why do we call it a hamburger when there’s no ham in it?”. Personally (although I know others strongly disagree on this point), I couldn’t take Korn seriously after I heard the track ‘Shoots and Ladders’ (on their first album) where Jonathan Davis sings nursery rhymes. That wasn’t “edgy juxtaposition”; it was just singing nursery rhymes, which wasn’t cool then and is not cool now, and won’t be cool when an AI inevitably does it in 2021.

Whatever success OpenAI has, I find it hard to believe I will ever put any of its compositions in the class of great music or to believe that AI will ever have style.

I can’t really see an AI having enough insecurity to become Roger Daltrey, let alone Kurt Cobain. I don’t fancy the chances of an AI ever wondering where in the world to go next, as she smokes cigarettes and listens to jazz and aches over seemingly insurmountable personal loss in a Paris café. I can’t see her quietly walking through a forest and contemplating the inky depths of the sea and weaving the words to express her sympathy for Atlas, with the world on his shoulders.

Style is something you can’t fabricate – even if you know it when you see it. Real style has an edge; it is divisive; it’s new or subversive; it has human flesh for sacrifice.